PRSA releases new guidelines on ethical AI use in PR

The organization sees opportunity for PR pros to act as an “ethical conscience” and combat disinformation throughout AI development and applications.

The Public Relations Society of America has released a new set of ethics guidelines to help PR professionals make informed, responsible choices in the fast-moving world of artificial intelligence.

“There are lots of opportunities with AI. And while we’re exploring those opportunities, we need to look at how we can guard against misuse,” said Michelle Egan, PRSA 2023 chair.

Potential roadblocks

ChatGPT was released just over a year ago. Even since January of this year, Egan has seen significant changes in PRSA members’ attitudes toward generative AI.

“People said to me, ‘it feels like cheating,’” Egan recalled. “To now, ‘oh, I can see how starting with one of these tools … gives me a little bit of a running start and lets me put more time into the higher order things so that I can do strategic thinking.’”

It’s likely that this guidance will evolve as the tools do. For now, when she looks to the future, Egan anticipates more technological growth, along with potential pitfalls.

As we move into a U.S. election year, she expects growing polarization to only add to the swell of mis- and disinformation, much of it driven by the rapid advancement of AI tools.

But she also sees the potential for members of the profession to drive real change.

“We have the opportunity to really educate across the board, to other professions and the C suite about the challenges there and how to prepare for it,” Egan said.

How the guidance was developed

At the beginning of 2023, Egan asked committees what their top concerns were for the year ahead. The answer was resounding, Egan said: AI and mis- and disinformation.

The new guidance builds on PRSA’s existing Code of Ethics, which the organization places at the center of its mission. It was developed by the PRSA AI Workgroup, chaired by Linda Staley and including Michele E. Ewing, Holly Kathleen Hall, Cayce Myers and James Hoeft. The document is based on conversations with experts, other organizations’ guidance and the framework already provided by the PRSA’s code.

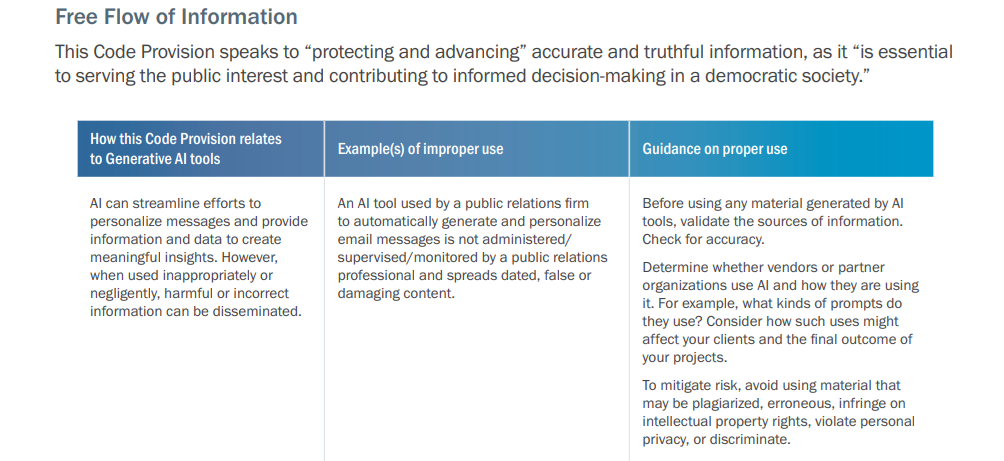

The document lays out its advice in a series of tables that walk readers through each provision of PRSA’s ethics code, explaining its connection to AI, potential improper uses or risks and ways to use AI ethically.

Egan said other critical topics for communicators to consider right now are the potential for AI to spread disinformation and the biases that can be built directly into these powerful bots.

“When you’re using these models, you need to understand that the content comes from humans who have implicit bias, and so therefore, the results are going to have that bias,” Egan said.

Properly fact-checking and sourcing content produced by AI, and ensuring you aren’t taking credit for someone else’s work, are also top of mind.

“To claim ownership of work generated through AI, make sure the work is not solely generated through AI systems, but has legitimate and substantive human-created content,” the guidance advises. “Always fact-check data generative AI provides. It is the responsibility of the user — not the AI system — to verify that content is not infringing another’s work.”

Egan stressed the importance of education at this phase in AI’s tech cycle — not just for practitioners, but also within organizations.

“We have to find our voice and speak up when there’s something that we truly think is unethical and not engage in it,” she said. The guidance document says PR professionals should be “the ethical conscience throughout AI’s development and use.”

Find the full AI guidance here.

Allison Carter is executive editor of PR Daily.